Artificial Intelligence (AI) Pedagogy

Artificial Intelligence (AI) Decision-Making

IMAGE: DALL·E 3 via Copilot, prompt: “robot working at a computer in the style of 1950’s comic book art” (12/31/23).

Since late 2022, when OpenAI widely released ChatGPT, there has been time to get more “comfortable” – or at least more familiar – with mainstream Artificial Intelligence (AI). Excitement over AI in Higher Education is tempered by the dramatic impact it’s having on the teaching and learning process. The dust has not yet settled, and no one can accurately predict to what extent Artificial Intelligence will transform the learning process.

There are many approaches to dealing with AI’s presence in the classroom, and what is shared here contends that a middle-ground solution exists: a hybrid approach in which AI can be infused, at suitable levels, within cognitive academic tasks without dramatically overhauling current practices.

STARTING OUT . . .

The beginning of a new semester is a great time to have an honest conversation about Artificial Intelligence with students. Such a discussion not only addresses the particular role AI will play academically in their course(s) but also:

- Generates an appreciation of the positives and negatives associated with any technology

- Highlights the rapidly evolving “growth phase” of AI

- Brings attention to the ethical debates surrounding potent technologies

- Reveals the difficult process of adapting and integrating new and unfamiliar technologies into any workflow or profession

- Encourages reflection on the personal use of technology

- Demonstrates that real-world issues are often not “black and white”

- Acknowledges that engaging with different opinions and positions (on any topic) is a means to intellectual growth, reflection, inclusion, and validation

A benefit for learners engaging with AI is that they will be able to:

- Critically evaluate the technology’s strengths and weaknesses

- Select/apply the most appropriate tool(s) for a given situation

- Assess the credibility/accuracy of AI-generated content

- Evaluate the usefulness and applicability of AI-generated content

- Acknowledge how AI reliance affects skills development

- Deduce that AI has implications for the future that no one can accurately predict

FIRST CONSIDERATIONS

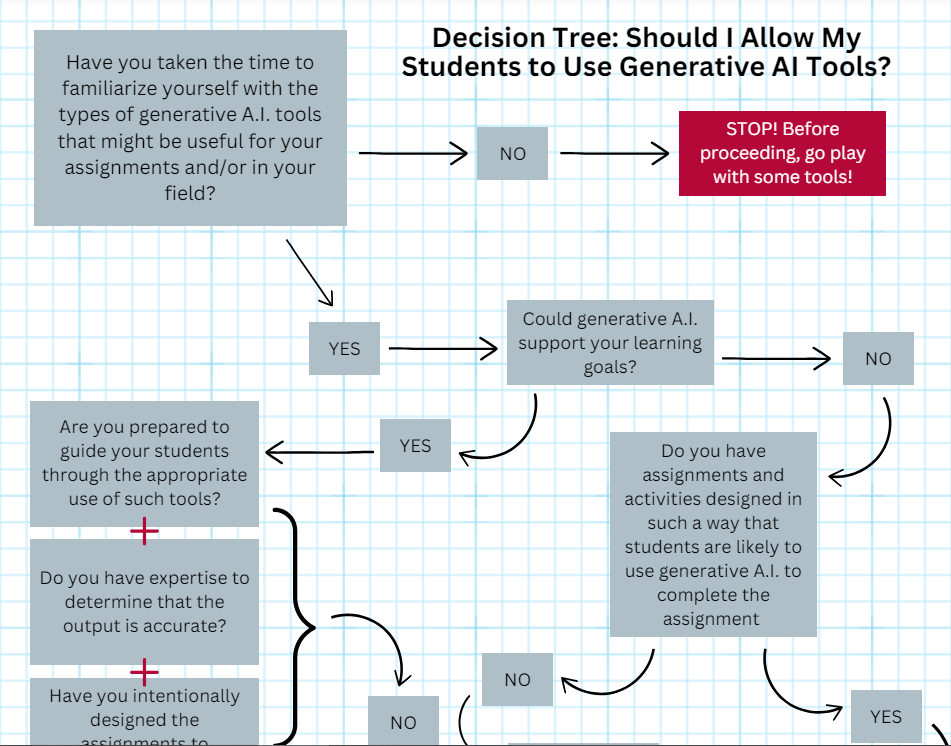

How can faculty begin thinking about Artificial Intelligence’s proper role(s) in Higher Education? Let’s start with some foundational considerations answered by the following questions!

#1: Do we really have to discuss pedagogy? Students are all on board with AI, aren’t they?

The recent (May 2024) report “AI and Academia: Student Perspectives and Ethical Implications” reveals growing concern about AI’s impact on learning. Of the 1,300+ college-bound high school students interviewed, 43% said they “believe using [AI] tools contributes to a significant decline in critical thinking and creativity”; 40% think that “using AI is a form of cheating” and that “AI tools contribute to misinformation.”

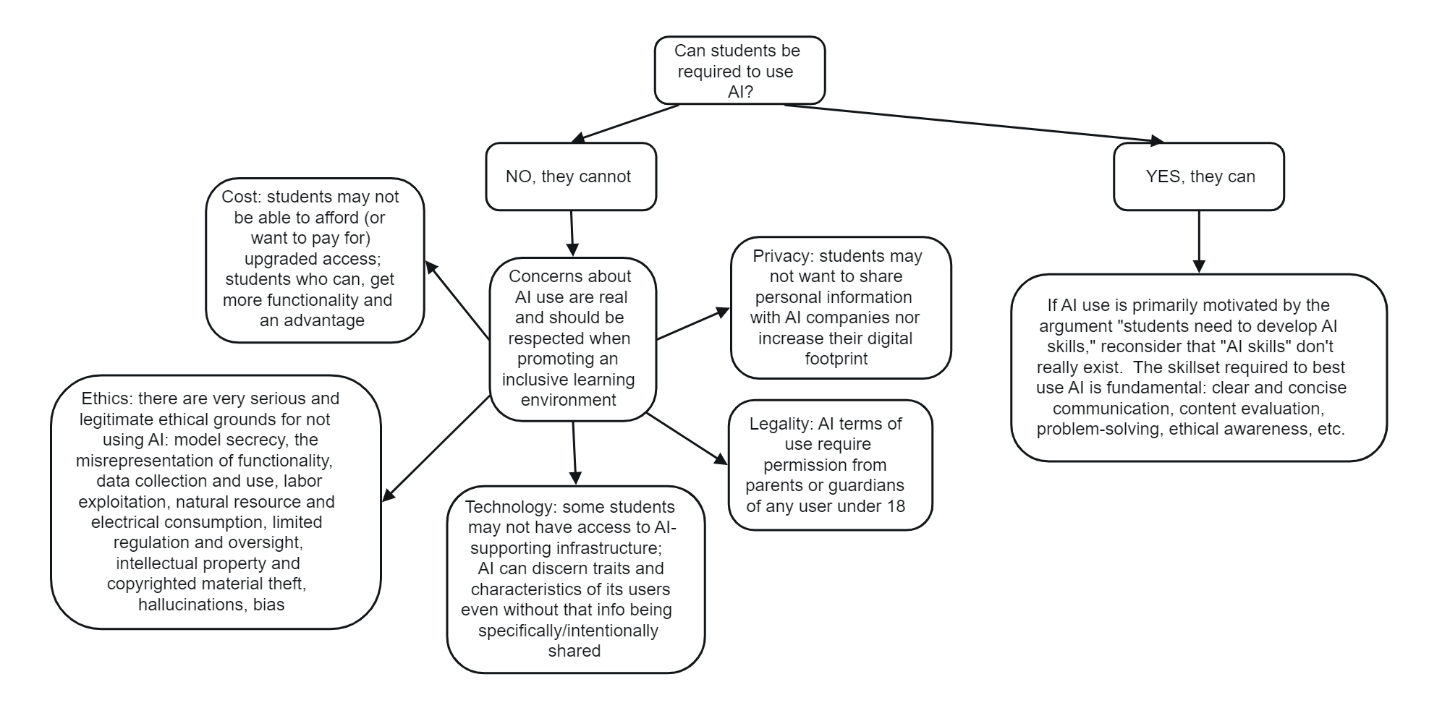

#2: Can I require my students to use AI?

The answer is no, you can’t. Why not? Concerns regarding AI use are legitimate and should be respected to promote an inclusive and equitable learning environment.

Figure 1 Considerations associated with “Can I require my students to use AI?”

Some of the challenges posed by AI include:

- Cost: students may not be able to afford (or want to pay for) upgraded access; students who can pay may be getting an inequitable advantage from more functionality and improved performance

- Ethics: there are very serious and legitimate ethical grounds for not using AI: model secrecy, the misrepresentation of functionality, data collection and use, labor exploitation, natural resource and electrical consumption, limited regulation and oversight, intellectual property and copyrighted material theft, hallucinations, and bias

- Technology: some students may not have access to AI-supporting infrastructure or may be unfamiliar with how the technology works

- Legality: AI terms of use require permission from parents or guardians of any user under 18. Using an AI permission form can address this (the shared document comes from North Carolina’s “Generative AI Implementation Recommendations and Considerations for PK-13 Public Schools” publication: 01/16/24, Page 9)

- Privacy: students may not want to share personal information with AI companies or increase their digital footprint; AI can discern traits and characteristics of its users even without that information being explicitly or intentionally shared

A BLUNT APPRAISAL

If you are inclined to require AI in your courses based on the argument that “students need to develop AI skills,” consider that “AI skills” don’t really exist!

In accordance with the position that AI doesn’t necessitate revolutionary changes to Higher Education, “AI skills” should be framed as the application of the foundational skills every student should possess: attention to detail, the ability to think critically and solve problems, flexibility and curiosity, and clear communication. Further, the average non-computer science, non-robotics, non-programming student is not going to develop in-depth insight into, or advanced proficiency in, the fundamentals and complexities of Artificial Intelligence by prompting Google’s Gemini or Anthropic’s Claude.

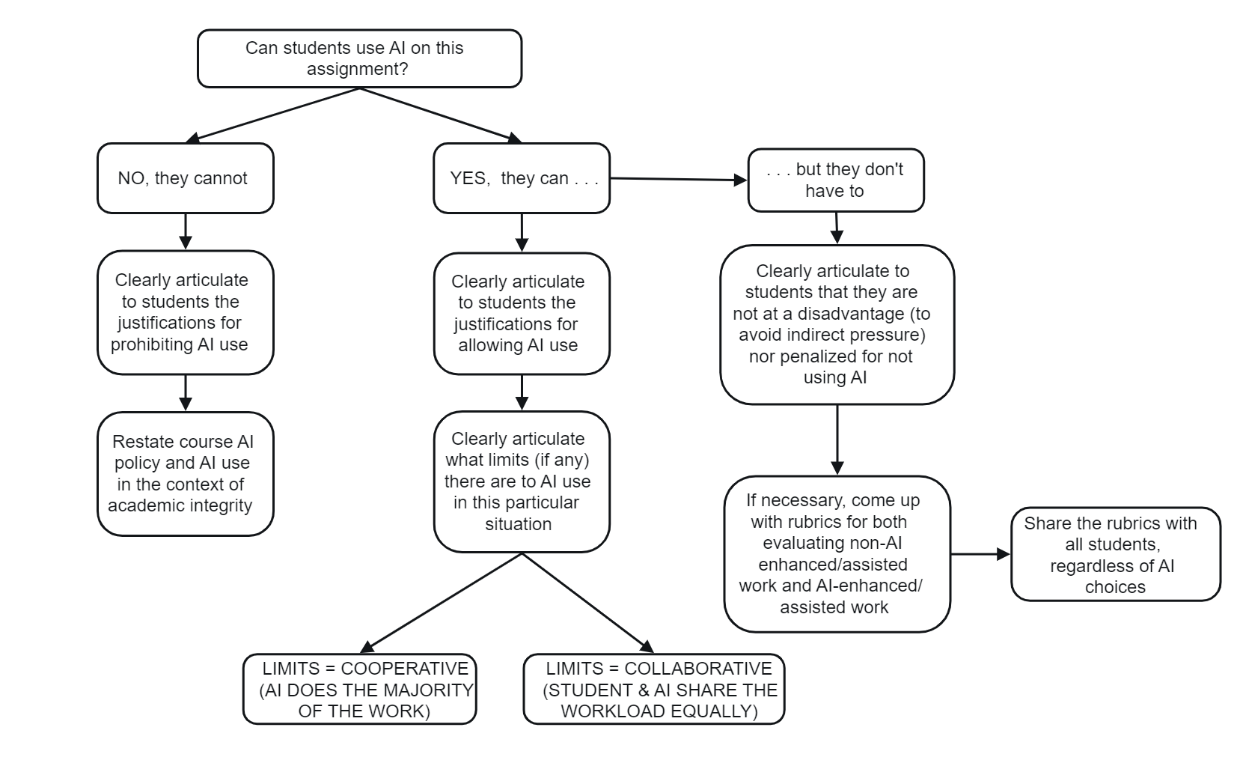

#3: What should I do once I have decided on the use of AI in my classes?

STUDENT-FACING

When establishing your AI limits, consider taking the following steps if AI use is not allowed:

- Clearly articulate to students the justifications for prohibiting AI use

- Explain AI’s limitations within the context of a discipline or profession

- Frame the course’s AI policy in the context of academic integrity

However, if AI use is allowed, these are some appropriate actions:

- Clearly articulate to students the justifications for allowing AI use

- Explain AI’s limitations within the context of a discipline or profession

- Frame the course’s AI policy in the context of academic integrity

- Determine the acceptable levels of AI use (this most likely will differ according to task, assignment, or project)

  - Cooperative = AI does the majority of the work

  - Collaborative = student and AI share the workload equally

  - Determining contribution levels/percentages/amounts is not an exact (or easy) science, but a good rule of thumb is to ask yourself, “Am I having a student perform the detailed, complex, time-consuming task, or is this being passed on to AI?”

INSTRUCTOR-FACING

If students make the choice to bypass AI – even when allowed – and complete work on their own, an instructor must keep in mind that non-AI work will:

- Not be produced as quickly as AI work or in as great a quantity (or length)

- Not contain as much multi-media (AI can easily produce videos, animation, music, sounds, and images)

- Not be as dynamic (AI can generate mind maps, working computer programs, timelines, spreadsheets, etc.)

- Not appear as polished/refined

- Not come across as confident or assured as AI work

- Not be as correct (in most cases) in terms of grammar, vocabulary, translation, or coding

Figure 2 Considerations associated with “Can students use AI on this assignment?”

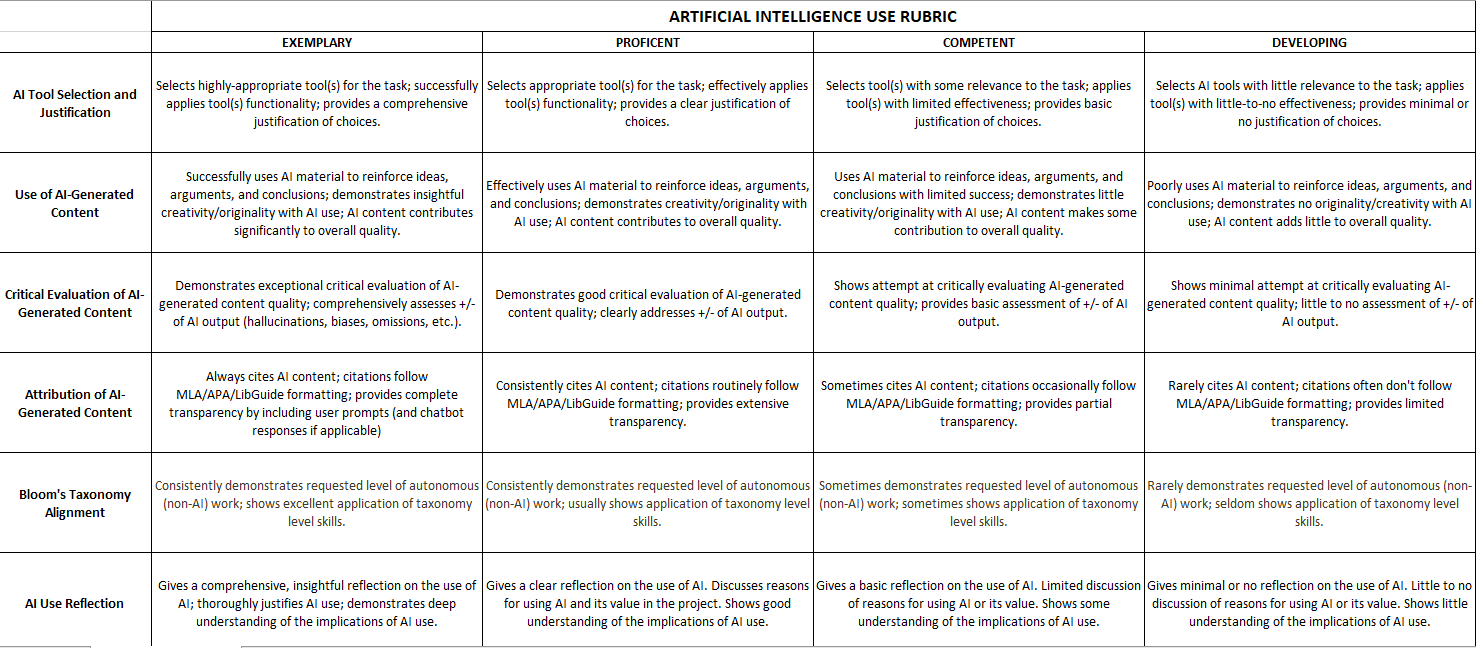

Also, faculty should develop rubrics (or additional rubric categories) for evaluating both non-AI enhanced/assisted work and AI-enhanced/assisted work. Share those rubrics with all students, regardless of their AI choices.

PEDAGOGY WHEN PROHIBITING AI USE

Academic freedom gives faculty members a choice in whether or not to incorporate Artificial Intelligence (AI) into their teaching, so it’s possible a single department, discipline, or course will have great variation in how AI is leveraged to promote learning.

If the decision is made that AI is not going to be allowed to complete coursework, then certain questions need to be answered as a means of ensuring an instructor is ready to enforce their policy.

#1: What first steps should I take if I don’t allow AI in my classes?

A great place to start is developing class policies and methodologies that can reduce the temptation and/or appeal of using Artificial Intelligence (AI) from the first day!

Some proposals include:

- Share AI use limitations and follow-up measures for suspected violations of academic integrity

- Discuss your institution’s academic integrity policy with students, with an emphasis on how it applies to Artificial Intelligence

- Supplement AI use discussions with personal/professional justifications (to foster transparency and communication)

- Explain the limitations, restrictions, and/or complications of using AI in your discipline and/or profession as well as the benefits of not using AI

- Ensure homework policies accommodate late submissions or provide a grace period

- Approach any first concerns over prohibited AI use as a way to foster dialogue with the student so you can articulate your concerns

- Require work be completed with oversight: within a physical classroom or testing center, via Respondus LockDown Browser with Respondus Monitor, or through a Zoom meeting (when applicable)

#2: Should I include an Artificial Intelligence use statement in my syllabi?

Absolutely! A syllabus should include a statement regardless of whether an instructor includes or excludes AI in their course.

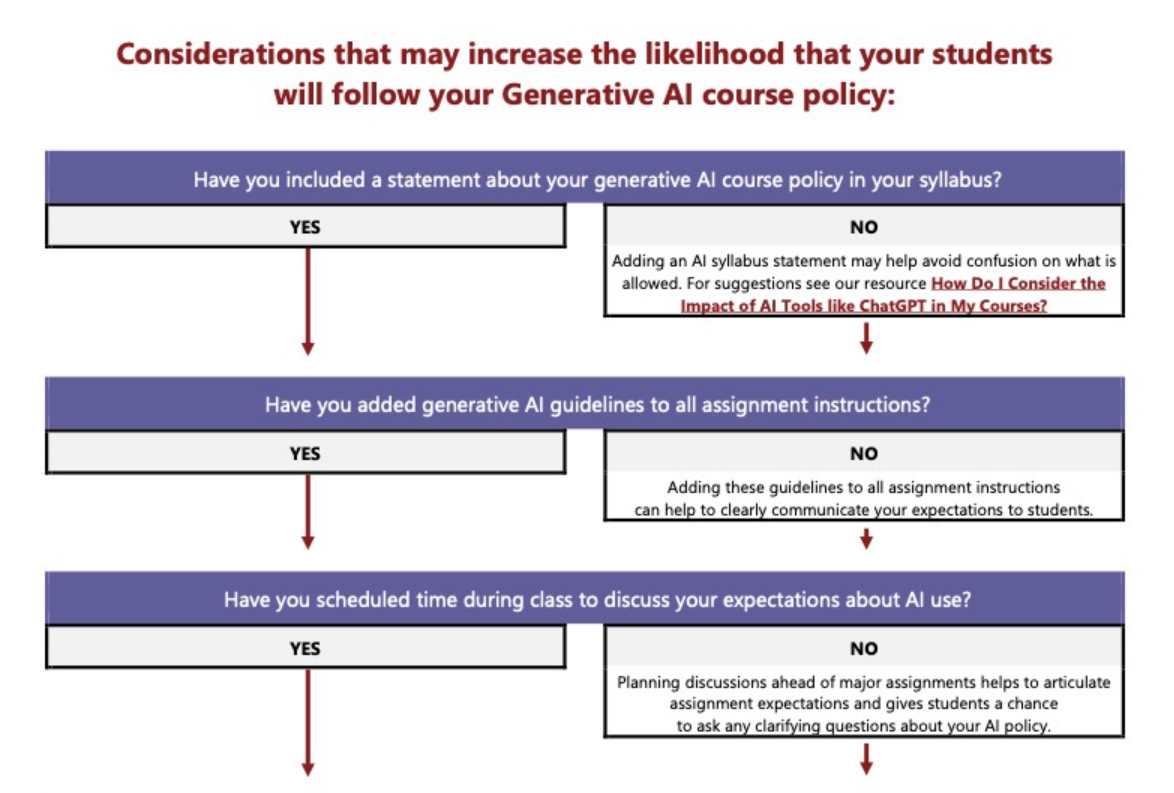

Surveys reveal students are confused about how they are allowed to use Artificial Intelligence to complete their coursework. They are also unclear about what AI-use cases may potentially violate academic integrity policies. Therefore, a syllabus policy, supported by assignment-specific AI directions, reduces learner anxiety, confusion, and frustration.

COURSE-LEVEL SYLLABI POLICIES

A comprehensive “Generative AI” use policy includes:

- The instructor’s professional philosophy regarding AI use in Higher Education

- Details on how AI can and cannot be used in the course

- The follow-up steps that will be taken when concern is raised over violations of academic integrity (Do students get a warning? Do students earn a zero? Do students get to resubmit their work?)

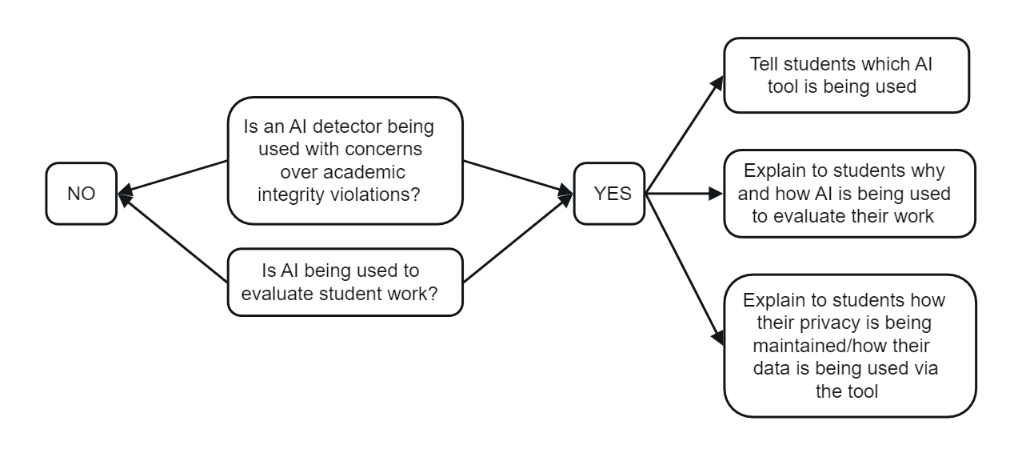

- Which AI detection tools, if any, will evaluate student work in cases of concern over potential academic integrity violations, as well as how the tool’s reporting will be interpreted and used for decision-making*

- Which AI tools, if any, will evaluate student work and/or provide constructive feedback*

*If an institution lacks a formal agreement with an AI software developer, students need to be informed how their work is being used by the AI company (Does their work train the LLM? How long is their work saved? Is their work reviewed by third parties?).

For more information on AI detectors, visit An Instructor’s Artificial Intelligence (AI) Handbook.

Figure 3 Considerations associated with using AI detectors and AI tools to evaluate student work.

Using plagiarism software to assess a student’s work differs from using Artificial Intelligence because:

- There is a well-established tacit agreement that coursework may be evaluated for plagiarism and that this process does not infringe upon intellectual property rights

- Plagiarism software companies have formal agreements with colleges and universities and abide by academic privacy policies such as FERPA

- The evaluative information shared by plagiarism detectors is standardized across a campus, whereas faculty do not all use the same AI detection tools and often refer to more than one; those platforms differ in their assessment approaches and in how they report conclusions

#3: What are some of the best techniques for reinforcing my AI prohibition policy?

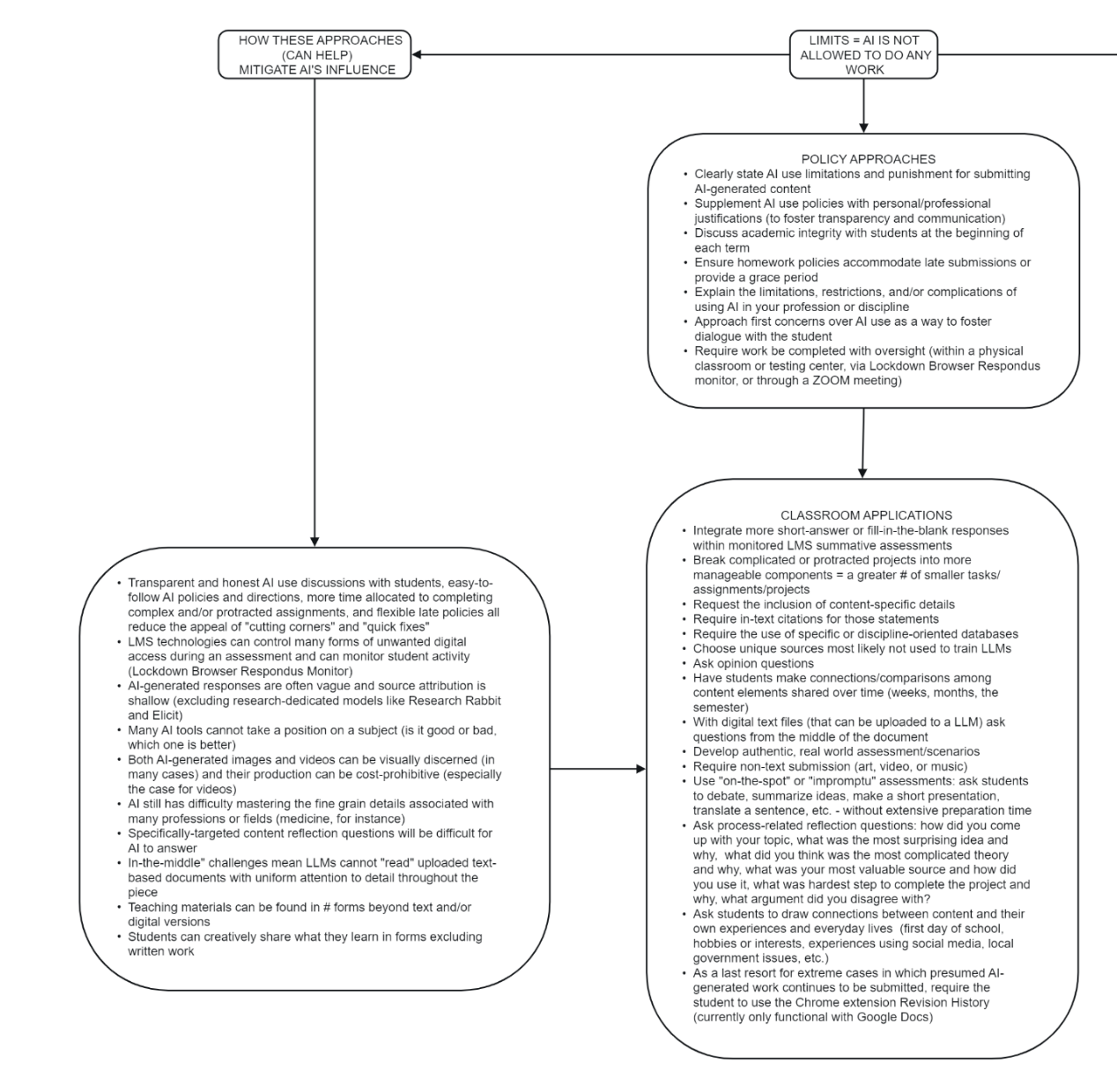

While there is no guarantee AI cannot find its way around these recommendations, they’re still worth trying! (Refer to Figure 4)

CLASS LEVEL

- Clearly state AI use limitations and punishment for submitting AI-generated content

- Supplement AI use policies with personal/professional justifications (to foster transparency and communication)

- Discuss academic integrity with students at the beginning of each term

- Ensure homework policies accommodate late submissions or provide a grace period

- Explain the limitations, restrictions, and/or complications of using AI in your profession or discipline

- Approach first concerns over AI use as a way to foster dialogue with a student

- Require work be completed with oversight (within a physical classroom or testing center, via Respondus LockDown Browser with Respondus Monitor, or through a Zoom meeting)

COURSEWORK LEVEL

- Integrate more short-answer or fill-in-the-blank responses within monitored LMS summative assessments

- Break complicated or protracted projects into more manageable components (a greater number of smaller tasks, assignments, or projects)

- Request the inclusion of content-specific details, examples, and supporting information

- Mandate in-text citations for detail-specific statements

- Require the use of specific or discipline-oriented databases

- Choose unique sources most likely not used to train LLMs

- Ask students to respond to opinion questions

- Have students make connections/comparisons among content elements shared over time (weeks, months, the semester)

- With digital text files (that can be uploaded to an LLM) ask questions from the middle of the document

- Develop authentic, real-world assessments/scenarios

- Require non-text submissions (art, video, or music)

- Use “on-the-spot” or “impromptu” assessments: ask students to debate, summarize ideas, make a short presentation, translate a sentence, etc. – without extensive preparation time

- Ask process-related reflection questions: How did you come up with your topic? What was the most surprising idea and why? What did you think was the most complicated theory and why? What was your most valuable source and how did you use it? What was the hardest step to complete the project and why? What argument did you disagree with?

- Ask students to draw connections between content and their own experiences and everyday lives (first day of school, hobbies or interests, experiences using social media, local government issues, etc.)

- As a last resort for extreme cases in which presumed AI-generated work continues to be submitted, you can consider requiring a student to use the Chrome extension Revision History (currently only functional with Google Docs)

#4: What are the underlying assumptions behind these recommendations?

Many of the recommendations capitalize on the existing limitations and weaknesses of Large Language Models (LLMs).

The justifications include:

- Transparent and honest AI use discussions with students, easy-to-follow AI policies and directions, more time allocated to completing complex and/or protracted assignments, and flexible late policies all reduce the appeal of “cutting corners” and “quick fixes”

- LMS technologies can control many forms of unwanted digital access during an assessment and can monitor student activity (Respondus LockDown Browser with Respondus Monitor, for instance, can record a student’s test-taking session and flag suspicious activity)

- AI-generated responses are often vague and source attribution is shallow (excluding research-dedicated models like Research Rabbit and Elicit, which are trained to provide detailed source information regarding journal and peer-reviewed material)

- Many AI tools still cannot take a position on a subject (Is it good or bad? Which one is better?)

- Both AI-generated images and videos can be visually discerned (in many cases) and their production can be cost-prohibitive (especially the case for videos)

- AI still has difficulty mastering the fine-grained details associated with many professions or fields (medicine, for instance)

- Specifically targeted content reflection questions will be difficult for AI to answer

- “In-the-middle” challenges mean LLMs cannot “read” uploaded text-based documents with uniform attention to detail throughout the piece

- Teaching materials can be found in numerous forms beyond text and/or digital versions

- Students can creatively share what they learn in forms excluding written work

PEDAGOGY WHEN ALLOWING AI USE

When students are allowed to use Artificial Intelligence (AI), attention should be paid to guaranteeing AI has a measured role in the learning process.

Key concerns regarding the extent of AI’s influence over coursework include:

- Maintaining a student’s active engagement in, and control over, their learning

- Ensuring the technology does not assume too many essential tasks associated with the learning process

- Guaranteeing the integrity of course learning objectives (e.g. making sure assessments evaluate a student’s achievement of learning objectives rather than AI’s)

#1: What first steps should I take if I allow AI in my classes?

STEP 1: Keep in mind that due to legitimate personal and ethical concerns, it’s a best practice not to require AI use.

STEP 2: Employ approaches supporting the appropriate and responsible use of Artificial Intelligence; for instance:

- Outline course AI use parameters/conditions with students at the start of the term (via a syllabus policy and intentional discussion)

- Adjust course competencies/sub-competencies to accurately reflect reduced student contributions (applicable in cases of cooperative AI use – elaboration follows)

- Create opportunities to complete any given task or project both with and without AI

- Guarantee equitable performance evaluation by developing grading rubrics (or additional rubric criteria) that incorporate AI use

Figure 4 Considerations associated with prohibiting AI use to complete coursework.

For additional insights, read “Why Do Students Cheat?” from the University of Minnesota and “What do AI chatbots really mean for students and cheating?” from Stanford’s Graduate School of Education.

STEP 3: Decide the extent of AI’s role within particular assignments.

ASSIGNMENT-LEVEL AI POLICIES

In accordance with Transparency in Learning and Teaching in Higher Education (TILT Higher Ed) principles, directions explaining AI use parameters and/or conditions should be included in every assignment for which AI is permissible. This is especially important when instructors allow different degrees of AI involvement – depending upon the nature of a project.

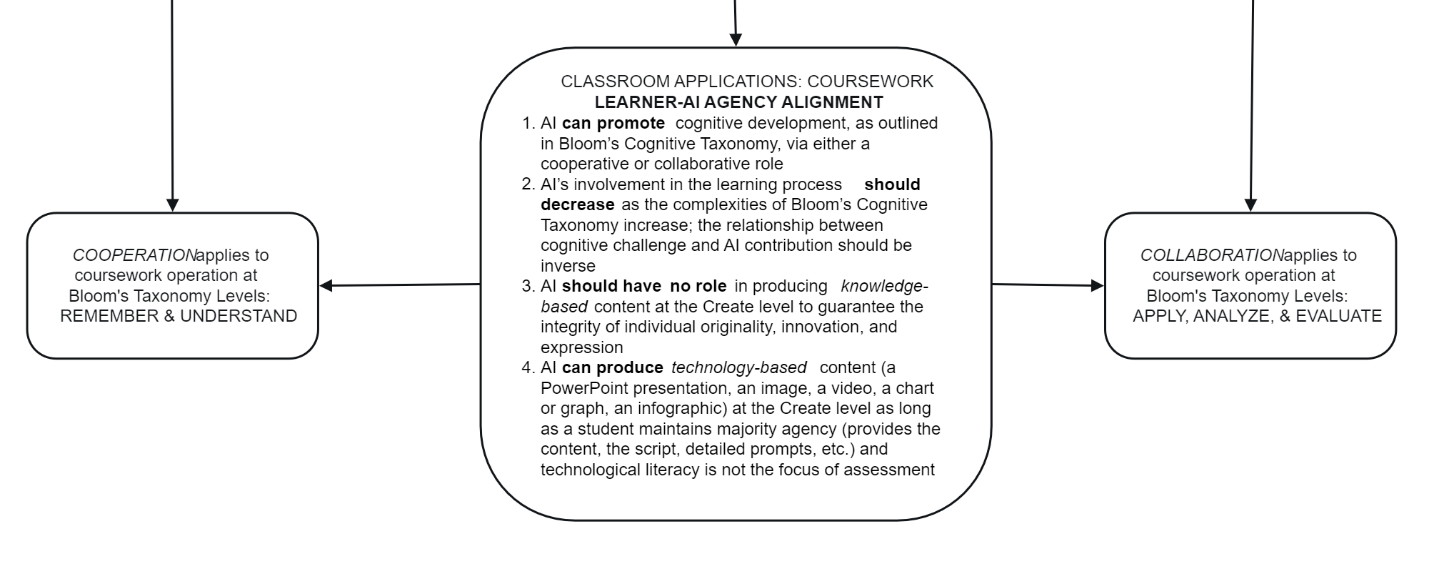

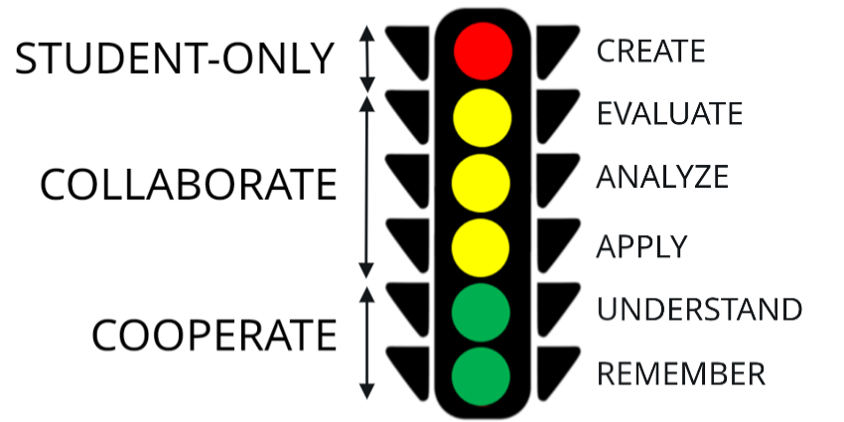

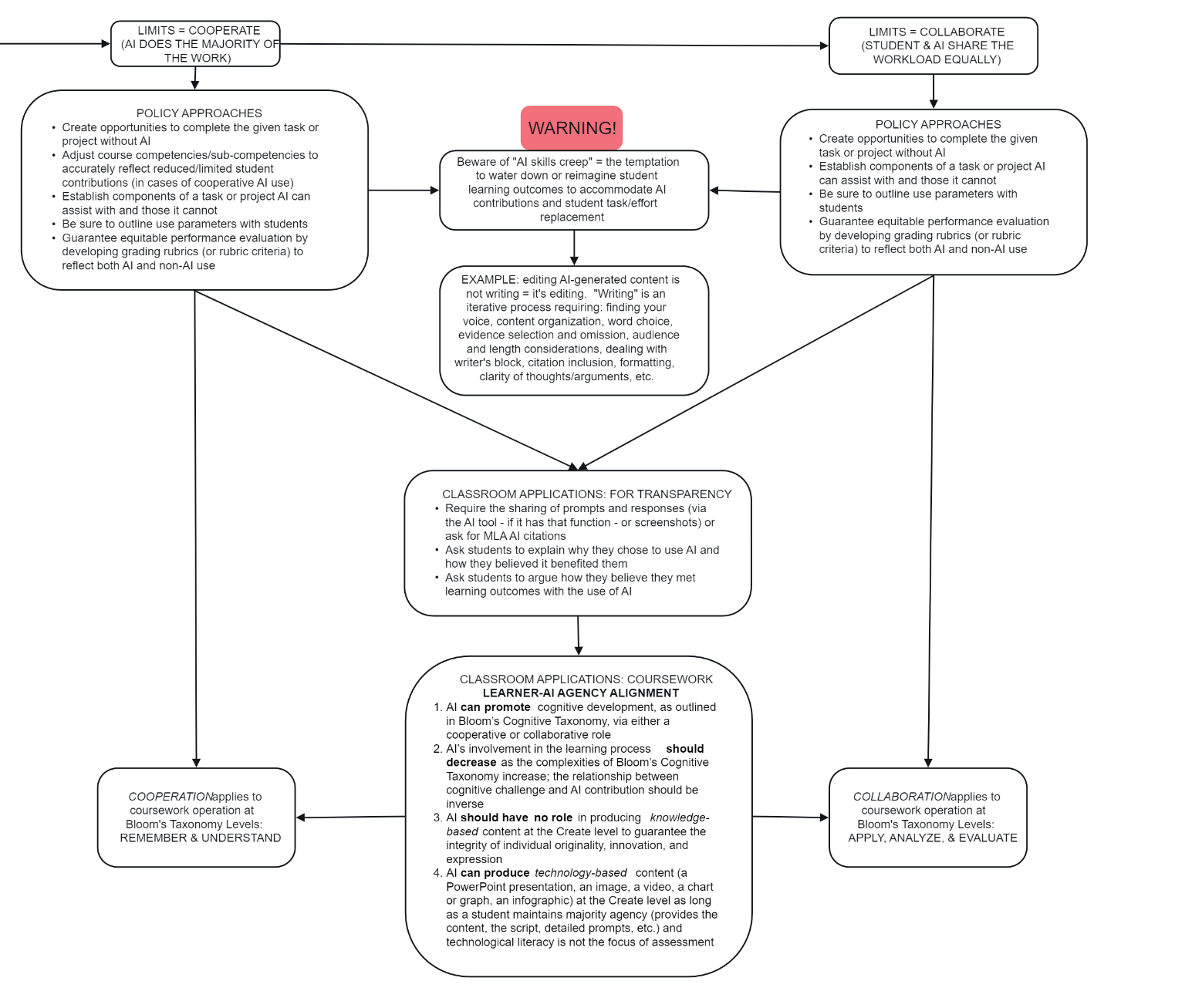

When determining AI’s contributions to the learning process (i.e. the degree of student assistance it provides), consider the progression of development outlined in Bloom’s Cognitive Taxonomy. AI’s involvement in the learning process should decrease as the demands of Bloom’s Cognitive Taxonomy increase; the relationship between cognitive challenge and AI contribution should be inverse.

Accordingly, AI’s influence on, and contribution to, knowledge acquisition can be expressed along a spectrum of involvement (from more to less) broken down into either cooperative or collaborative.

“Cooperative” indicates students and AI do not share the academic workload equally = AI takes on more of the responsibility. This working relationship is applicable for lower cognitive challenges within Bloom’s Taxonomy: REMEMBER and UNDERSTAND.

“Collaborative,” on the other hand, indicates students and AI share the workload equally. This relationship is applicable for upper-level cognitive exercises within that Taxonomy: APPLY, ANALYZE, and EVALUATE. While equal effort supports the official definition of “collaborate,” it’s clear that in practice learners should bear more of the learning heavy-lifting when engaged in more advanced cognitive skills development.

#2: How can I foster positive student-AI interaction?

Promote transparency! Ask students to properly disclose the contributions of others = it’s a great practice to instill and is applicable to AI use and beyond . . .

- Require the sharing of chatbot prompts and AI responses

  - Some tools have a sharing feature built-in (e.g. Google Gemini)

  - If those features don’t exist, ask students to take and include screenshots

  - Request formal MLA AI citations

- Have students explain why they chose to use AI and how they believe it benefited them

- Ask students to argue how they believe AI assisted them in meeting learning objectives

Promote formative assessment! Students may be unsure how Artificial Intelligence can help them master concepts, ideas, skills, etc. Give them some suggestions, including asking AI to:

- Restate or rephrase complicated ideas or theories

- Provide explanations without jargon or for a particular level of experience or knowledge

- Generate review questions for a given subject or content area

- Engage in debate as a way to develop critical thinking and reasoning skills

- Share constructive feedback on a particular piece of writing

#3: Is there anything else I should consider if I allow AI to assist my students?

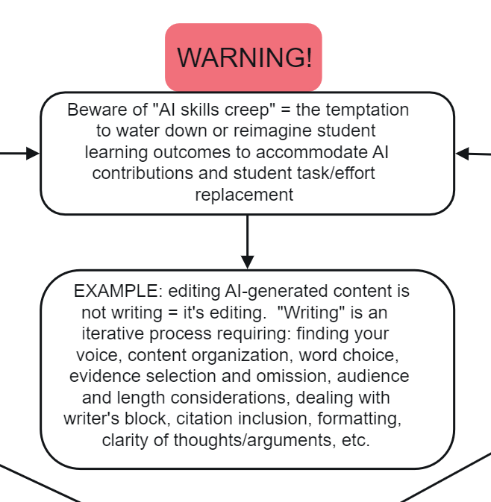

Beware of “AI skills creep” = the temptation to water down or reimagine student learning outcomes to accommodate AI contributions and student task/effort replacement.

AI-SKILLS CREEP: THE MAIN CHALLENGE TO LEARNING

While AI can play a constructive role in Higher Education, its contribution needs to be measured against potentially negative impacts. For example, when vital learning activities get pawned off to the technology, this significantly undermines a student’s engagement in the learning process. Undoubtedly, AI generates a lot of excitement, but educational quality should not suffer because we get caught up in the hype or jump on the bandwagon.

Figure 5 AI “skills creep” warning.

The threat materializes when AI is too quickly and excessively integrated into the curriculum and is allowed to do too much of the heavy lifting for students (i.e. “AI-skills creep”). Lowering expectations of student performance undermines their skill/knowledge acquisition. AI-skills creep can be seen in an extreme example taken from English composition.

Learning objective: through drafting an expository essay, students will demonstrate the ability to craft a clear and concise thesis statement, develop well-organized paragraphs with relevant evidence, and utilize transitions to create a logical and cohesive essay that effectively argues a central point.

Learning assessment: students will prompt AI to write an expository essay on a topic of their choice and then edit the essay in accordance with the elements of quality writing outlined in the learning objective.

Are students learning how to write in this scenario? What is actually happening?

- The learning objective is unintentionally watered down/revised/modified with the incorporation of AI’s contribution

- AI is shouldering the fundamental activities necessary for genuine learning

- Learning objective assessment is misrepresentative since it now reflects minimized student effort and reduced skills development

This example sidelines students from the process, thereby assigning them the role of editor, not writer.

By its nature, learning how to write is time-consuming, difficult, and often frustrating. It requires mastering many different skills, including organization, analysis, understanding audience and tone, articulating ideas clearly, editing, and revising, in addition to knowledge of correct grammar, punctuation, and spelling. Allowing AI to write for students bypasses all of these, and it is impossible to be a good editor without understanding how writing is created in the first place!

Further, students lose agency over their own learning and creation in the example. Their academic “product” should be a personal artifact unique to them that shares knowledge they have gained, how they processed it, and how they decided to share it, explain it, interpret it, etc. Simply put, AI writing is not student writing.

LEARNER-AI AGENCY ALIGNMENT

For anyone seeking to integrate AI into their curricula, the million-dollar question is, “How can Artificial Intelligence best assist learners?” Difficulty answering this stems from concern over excessively infusing AI into the learning process to the point it detrimentally impacts a student’s development of essential knowledge and skills. So how can faculty determine the proper role(s) of AI within their courses?

Learner-AI Agency Alignment (LAAA) applies degrees of Artificial Intelligence involvement to Bloom’s Cognitive Taxonomy. “Agency” calls attention to ownership – which entity, AI or a student, owns (i.e., controls, dictates) the particular academic task? LAAA proposes that the extent of AI agency should be adapted to the particular Taxonomy level being assessed.

Learner-AI Agency Alignment argues:

- AI can promote cognitive development, as outlined in Bloom’s Cognitive Taxonomy, via either a cooperative or collaborative role

- AI’s involvement in the learning process should decrease as the complexities of Bloom’s Cognitive Taxonomy increase; the relationship between cognitive challenge and AI contribution should be inverse

- AI should have no role in producing knowledge-based content at the Create level to guarantee the integrity of individual originality, innovation, and expression

- AI can produce technology-based content (a PowerPoint presentation, an image, a video, a chart or graph, an infographic) at the Create level as long as a student maintains majority agency (provides the content, the script, detailed prompts, etc.) and technological literacy is not the focus of assessment

Figure 6 Defining cooperation and collaboration; outlining the key principles of Learner-AI Agency Alignment.

The goal of the Learner-AI Agency Alignment is to provide guidance and clarity for instructors on the extent to which AI should assist students within their coursework, should they choose to allow the use of AI in any capacity.

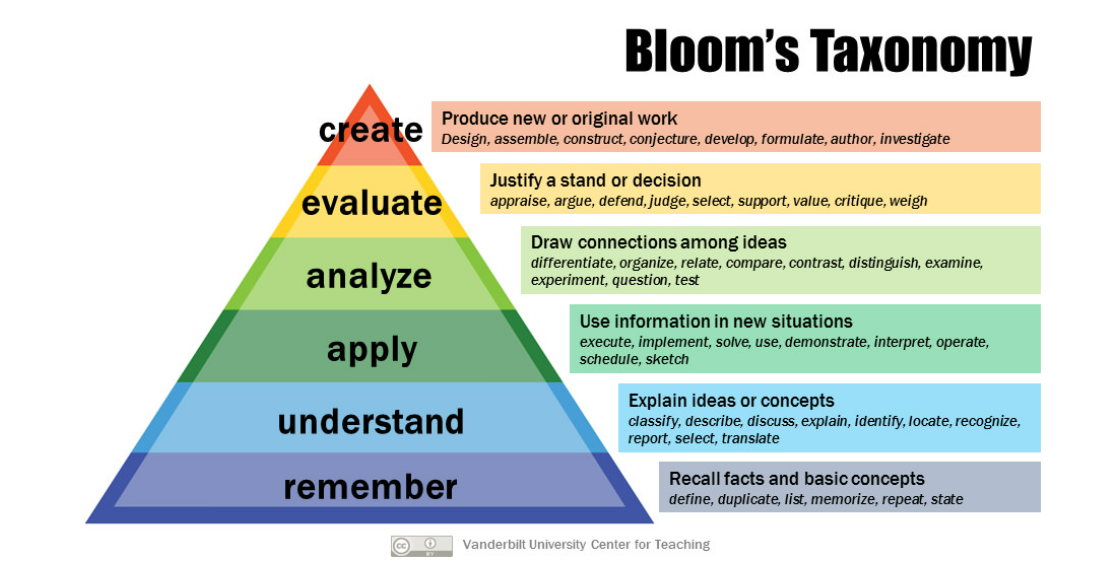

BLOOM’S TAXONOMY

Bloom’s Cognitive Taxonomy categorizes knowledge-based skills by complexity, progressing from the least demanding (remembering) to the most demanding (creating). It allows instructors to design activities that target specific degrees of higher-order thinking skills, thereby fostering deeper student learning and more precise assessment design.

Bloom’s Cognitive Taxonomy levels are as follows:

- REMEMBER: recall factual information without necessarily understanding its deeper meaning (list the names of the Great Lakes)

- UNDERSTAND: grasp the meaning of information by explaining, summarizing, or interpreting it in your own words (explain the process of photosynthesis)

- APPLY: use acquired knowledge and skills to solve problems in new situations (use the concept of supply and demand to explain higher food prices)

- ANALYZE: break down information into its parts, examine relationships, and draw evidence-based conclusions (analyze the strengths and weaknesses of nuclear power)

- EVALUATE: make informed judgments about the value, credibility, or effectiveness of information or ideas (evaluate scientific evidence for the effects of second-hand smoke)

- CREATE: generate new ideas, products, or solutions by using acquired knowledge and skills (design a rainwater collection system for arid regions)

Bloom’s Cognitive Taxonomy, used with permission from Vanderbilt University, Center for Teaching

DEFINING AI’S ROLE: COOPERATIVE OR COLLABORATIVE?

When determining AI’s contribution to the learning process (i.e. the degree of student assistance it provides), consider the evolution of cognitive development as outlined in Bloom’s Cognitive Taxonomy. AI’s involvement in the learning process should decrease as the demands of Bloom’s Cognitive Taxonomy increase; the relationship between cognitive challenge and AI contribution should be inverse.

Accordingly, AI’s influence on, and contribution to, knowledge and skills acquisition can be expressed along a scale of involvement: from more AI = cooperative to less AI = collaborative.

Cooperative: students and AI do not share the academic workload equally = AI takes on more of the responsibility, but not all of it. This working relationship is applicable for lower-level cognitive challenges within Bloom’s Taxonomy: REMEMBER and UNDERSTAND.

Collaborative: students and AI share the workload equally. And while equal effort aligns with the official definition of “collaborate,” it’s clear that in practice students should bear more of the academic heavy-lifting when undertaking advanced cognitive skills work. This relationship is applicable for upper-level cognitive exercises within that Taxonomy: APPLY, ANALYZE, and EVALUATE.

Figure 7 Illustrating AI’s collaborative or cooperative role in association with Bloom’s Cognitive Taxonomy.

WHY “CREATE” IS OFF LIMITS TO AI

“Create” in Bloom’s Taxonomy represents the highest order of cognitive skills. It asks students to apply original thinking, resourcefulness, and problem-solving. Learners must draw upon their own interpretations, perspectives, opinions, and originality. The process of creation is yet another level of learning involving trial and error, reflection, and refinement. Allowing AI to produce knowledge-based content at this level deprives students of the opportunity to engage in the most challenging, and rewarding, aspect of education.

PROMOTING STUDENT CREATIVITY IN AN AGE OF AI

The dramatic expansion of AI into more aspects of our daily lives, including education, may be overwhelming to learners. Further, it may also be disheartening. Therefore, promoting the importance of independent thinking, problem-solving, and creative expression is key. How can instructors promote creativity in a Higher Education space impacted by Artificial Intelligence?

- Encourage students to view AI as an assistive tool

- Explain the importance of maintaining engagement and agency over all levels of technology use

- Discuss the value, challenges, and rewards associated with self-expression

- Explain how exposure to different perspectives fosters self-reflection, increases understanding, widens perspectives, and enriches society

- Design projects applying human characteristics like empathy, self-reflection, resilience, comparative thinking, and cultural understanding

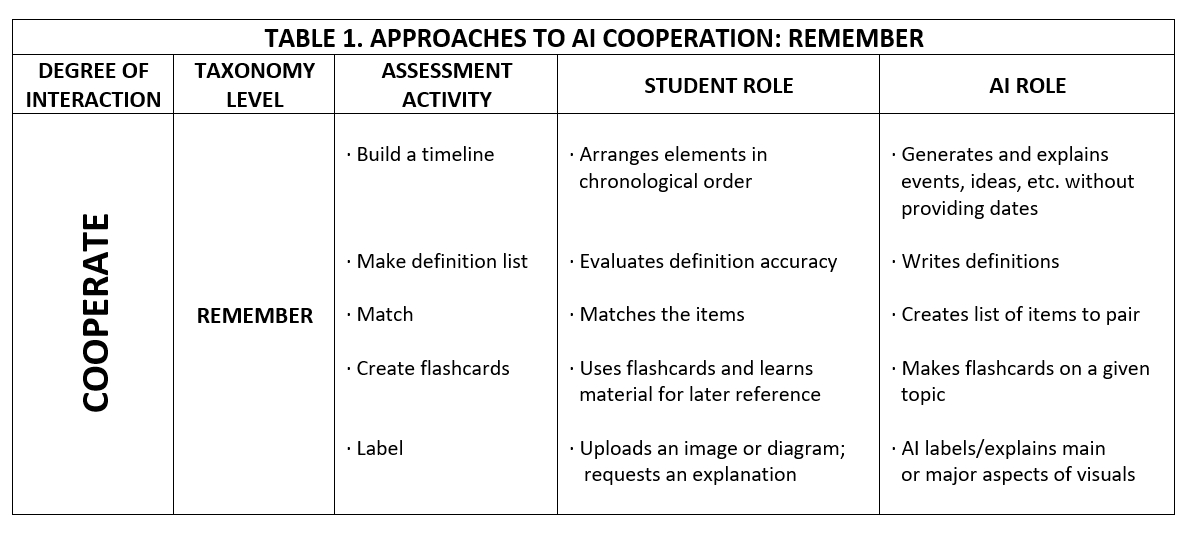

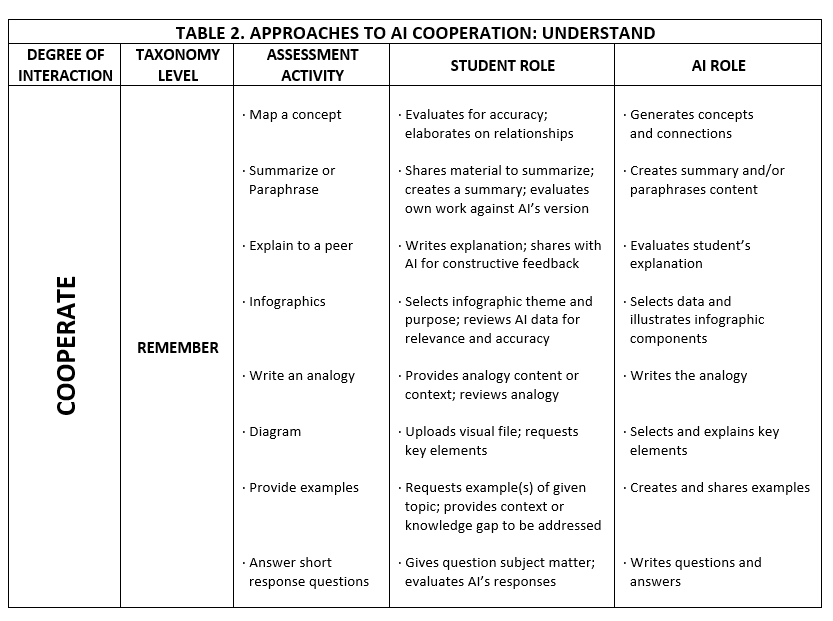

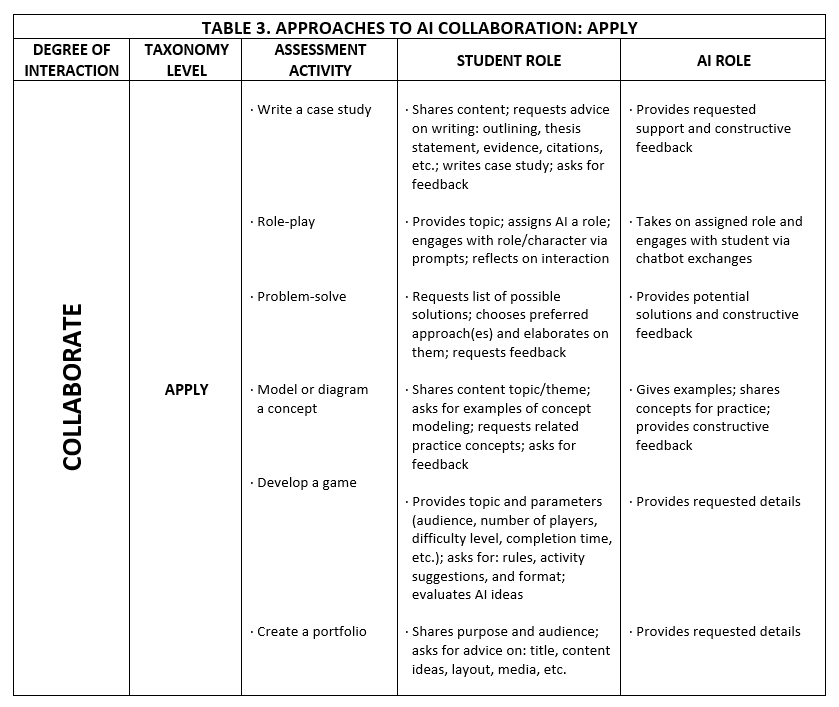

COOPERATION AND COLLABORATION IN APPLICATION

Maintaining the integrity of the learning process in an age of AI is possible by managing the extent of AI’s contributions or assistance. However, determining the allowable levels of AI participation in a learning task is nuanced and challenging.

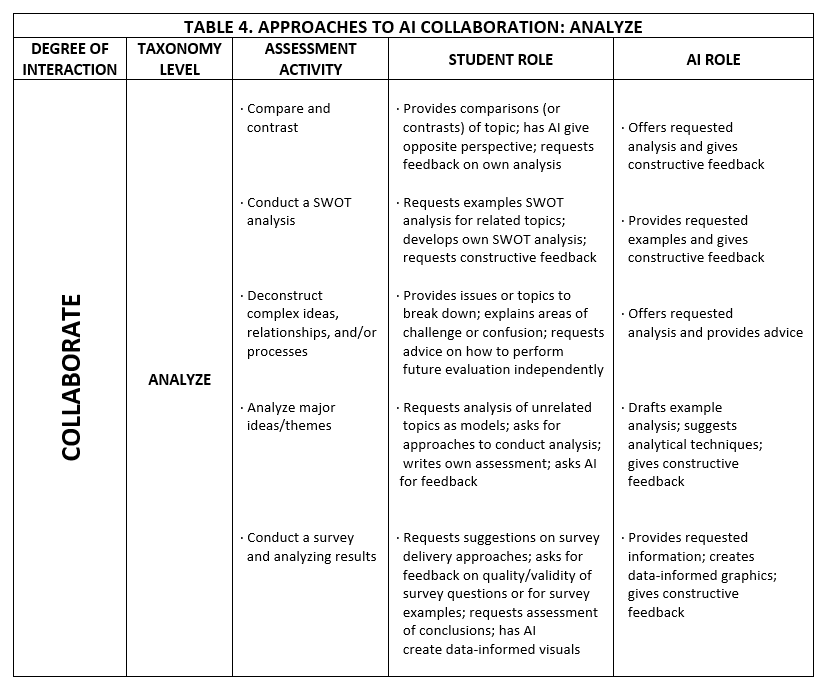

The shared tables provide suggestions on appropriate boundaries for assessment completion for the Taxonomy levels REMEMBER, UNDERSTAND, APPLY, ANALYZE and EVALUATE.

It is assumed that the assessments referenced in the tables are structured (formative or summative), required, evaluated by an instructor, and counted toward a student’s overall grade.

Figure 8 Student and AI roles in conjunction with Taxonomy level and assessment activity.

Figure 9 Considerations regarding AI use.

ASSESSMENT AND EVALUATION

How can educators ensure students are mastering material when AI is involved in the learning process? A digital AI use grading rubric is available.

Fortunately, integrating Artificial Intelligence into the curriculum doesn’t require a complete overhaul of existing student learning outcomes! Outcome integrity is maintained since AI provides nuanced support for higher-order cognitive tasks and students still (should) undertake the more demanding academic responsibilities. Moreover, even when AI plays a significant role in lower-level cognitive processes – such as those associated with REMEMBER and UNDERSTAND – it doesn’t inhibit a student from achieving the learning outcome.

The shared course student learning outcomes reflect cognitive tasks associated with particular levels of Bloom’s Taxonomy – progressing from less demanding to more complex. The examples of AI’s application demonstrate how learning outcomes can be successfully attained while permitting AI assistance.

Learning Outcomes with AI Contributions

REMEMBER

- Introduction to Criminal Justice: Identify several career options in criminal justice.

- How to cooperate A: Students prompt AI for a list of careers related to criminal justice and ask for a brief description of their duties/responsibilities. The student journals whether they are interested in pursuing any of those roles and explains their thinking.

- How to cooperate B: Students share with AI what their interests are in relation to criminal justice, or to future employment in general, and ask AI to determine potential criminal justice careers based on those preferences.

- How to cooperate C: Students share with AI their favorite police/crime movie, TV or streaming series and ask AI to discern the different criminal justice careers associated with main characters.

- International Relations: Describe the core components and definitions of power and of security dilemmas.

- How to cooperate A: Students prompt AI for a list of elements making up power and security challenges as well as their definitions. The student reviews that information against reliable sources (notes, handouts, documentaries, their textbook, etc.).

- How to cooperate B: Students share with AI one of their favorite spy or espionage TV series or films and ask AI to frame its descriptions of the components of power and security dilemmas within the context of that media. The student also asks for definitions and then reviews that information against reliable sources (notes, handouts, documentaries, their textbook, etc.).

UNDERSTAND

- International Relations: Differentiate diverse values, aspects of international law and dimensions of justice in the international community.

- How to cooperate A: Students prompt AI for a list of elements and explanations, as well as examples of how they differ. The student reviews that information against reliable sources (notes, handouts, documentaries, textbook, etc.).

- How to cooperate B: Students tell AI to conduct their exchange as if the student were a courtroom lawyer arguing a case and ask for an explanation of a significant international legal case. The student then independently discerns its key parts and requests AI’s feedback on their interpretations.

- How to cooperate C: Students share an issue of social justice important to them and ask AI to analyze it within the context of writing a grant proposal for funding to redress some of its root causes and significant implications (key components).

APPLY

- Macro Economics: Use economic modeling techniques to describe changes in macroeconomic measurements.

- How to collaborate A: Students give AI examples of economic indicators and ask for examples of how to evaluate them using economic modeling. The student then provides AI with their own analyses of additional indicators and requests feedback.

- How to collaborate B: Students tell AI they are a candidate running for a prominent office and need to explain the nature of the current economy (regional, local, or national) to a general audience. They ask AI to suggest a few economic indicators to address, draft their “speech,” and then request AI’s feedback on the accuracy and clarity of the communication piece.

- Social Psychology: Use scientific reasoning to interpret social psychological phenomena.

- How to collaborate A: Students ask AI for examples of social psychological phenomena. The student explains the phenomena using their knowledge of scientific reasoning and requests feedback.

- How to collaborate B: Students prompt AI to create a challenging or complicated social scenario and place the student within that context. The student applies scientific reasoning to explain the nature of their situation and then requests AI’s feedback on their interpretations.

- Social Problems: Apply the major theoretical perspectives to contemporary social problems.

- How to collaborate A: Students ask AI for examples of social problems. The student utilizes theoretical perspectives to explain and evaluate those issues and requests feedback.

- How to collaborate B: Students request AI provide an example of a particular social problem of personal interest (e-waste, minimum wage increases, the lack of affordable housing). The student utilizes theoretical perspectives to explain and evaluate the issues and requests feedback.

ANALYZE

- US History Since 1877: Critically review main issues and controversies that emerged from the post-Reconstruction period.

- How to collaborate A: Students prompt AI to share examples of the main issues and controversies associated with the post-Reconstruction period of American History. The student asks AI for best practices in evaluating content constructively and then applies those recommendations to their own assessments. They request feedback.

- How to collaborate B: Students tell AI they are assuming the role of a freelance researcher who writes popular histories. The student asks AI to provide a list of issues and controversies from the period which they use as source material to draft a mock “chapter” of analysis. They request feedback.

- US History Since 1877: Establish connections between conceptual issues and real historical events.

- How to collaborate A: Students prompt AI for a set list of thematic, social, political, etc. issues related to given historical events. They also request a few examples of how to argue and justify connectivity. The student determines the relationships for the remaining events and can ask AI for feedback on the quality of their justifications.

- How to collaborate B: Students ask AI to find and share a piece of popular culture associated with a historical event. The student explains its relationship to key themes, ideas, justifications, etc. from the period and requests feedback.

- International Relations: Analyze nationalism and transnational ideas and ideologies as well as contemporary challenges and global responses.

- How to collaborate: Students prompt AI for a set list of thematic, social, political, etc. issues related to given historical events. They also request a few examples of how to argue and justify connectivity. The student determines the relationships for the remaining events and asks AI for feedback on the quality of their justifications.

EVALUATE

- Macro Economics: Compare and contrast the effects of governmental economic policies.

- How to collaborate B: Students ask AI to debate the effects of governmental economic policies; AI argues against the policy while the student argues in favor of the policy, emphasizing what may have happened without it.

- US History Since 1877: Compare and contrast the impact of post-Reconstruction events on present-day institutions, ideologies, and issues.

- How to collaborate: Students compare and contrast current-day institutions, ideologies, and/or issues and connect them to post-Reconstruction events. They then request AI critically evaluate their logic.

- International Relations: Compare and contrast various classic and contemporary perspectives and levels of analysis used in studying international politics.

- How to collaborate A: Students provide AI with explanations arguing how the elements are either similar or dissimilar and ask AI to elaborate on the counter analysis. The student evaluates AI’s arguments and asks for feedback on the quality of their perspectives.

Sources and Additional Readings

- A Teacher’s Guide to Bloom’s Taxonomy, innovativeteachingideas.com

- Hamsa Bastani, Generative AI Can Harm Learning

- Chris Berdik, What aspects of teaching should remain human?

- Bloom’s Taxonomy, Bloomstaxonomy.net

- Bloom’s Taxonomy, Vanderbilt University, Center for Teaching

- Bloom’s Taxonomy, Wikipedia

- Bloom’s Taxonomy explained with examples for educators, Flocabulary by Nearpod

- Bloom’s Taxonomy Learning Activities and Assessments, University of Waterloo

- Bloom’s Taxonomy, Revised for 21st-Century Learners, University of Utah, Center for Teaching & Learning Excellence

- Bloom’s Taxonomy “Revised”: Key Words, Model Questions, & Instructional Strategies, Indiana University-Purdue University Indianapolis

- How Bloom’s Taxonomy Can Help You Learn More Effectively, verywellmind.com

- A.J. Juliani, A.I. isn’t the thing. It may be the thing that gets us to the thing.

- Revised Bloom’s Taxonomy, Iowa State University, Center for Excellence in Learning and Teaching

- Stefania Giannini, Generation AI: Navigating the opportunities and risks of artificial intelligence in education

- The double-edged sword of AI in education, Brookings Institution

Aids for AI Decision-Making

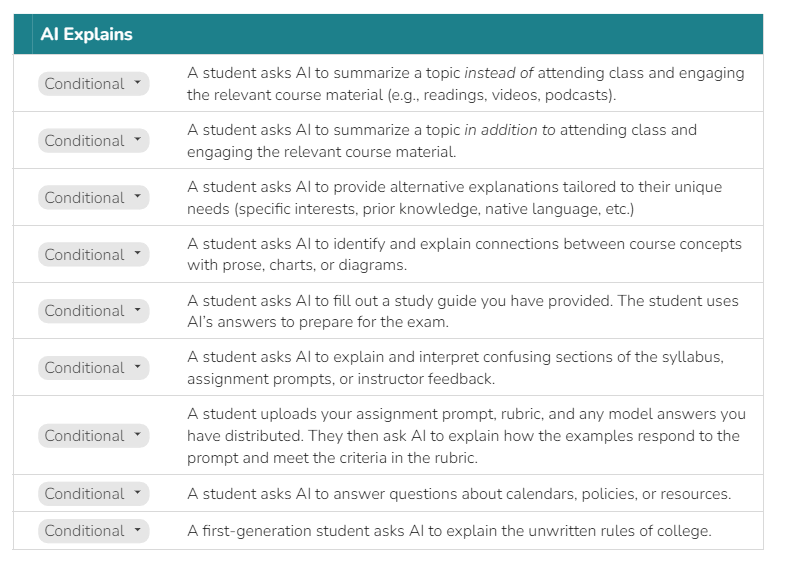

Take some time to reflect on various AI-use scenarios with the “AI Expectations Workshop” (shared via the AI in Education Google group; adapted by Betsy Barre; based on work from Ronni Gura) as a way of brainstorming your Artificial Intelligence parameters.

Want some creative help deciding where you stand on Artificial Intelligence use in your course? Temple University’s Center for the Advancement of Teaching has a visual decision-making tree (highlighted below) that may be of assistance!

What steps can you take to guarantee the success of your AI policy once you’ve established it? The University of Massachusetts Amherst’s Center for Teaching and Learning has a useful flowchart guiding readers through some essential considerations necessary for upholding AI use policies; interactive and accessible versions are also available.

Interested in creating a visual spectrum of allowable AI use in your course? Ditchthattextbook.com, creator of the “Classroom AI Use” infographic below, shares an adaptable template via Google Docs.

Are You AI-Ready?

Can you answer “yes” to the following questions regarding AI policies in your classes? If so, then you’re well on your way to fostering a transparent learning environment for your students!

- Do course syllabi include a statement about AI use?

- Am I personally, and professionally, comfortable with the AI limits/conditions I’m allowing?

- Are time and resources (classroom discussions and support materials) being dedicated to discussing AI class-related issues with students?

- If AI use is permitted, do assignment directions explain the expectations and limitations of that assistance?

- If AI use is permitted, can an assignment also be successfully completed without using AI?

- Have rubrics, or other evaluative materials, been created for evaluating AI-assisted coursework?

- If an AI detector is going to be employed, which detection tool will it be? How will its results/conclusions be used to address violations of academic integrity?