Concerns and Controls

Concern: AI and FERPA

Information on AI and FERPA is currently quite limited; below is information from independent research (from a non-legal expert).

FERPA: Family Educational Rights and Privacy Act (1974)

- (2011) Updates: Institutions that are FERPA compliant:

  - Can adopt limited directory information to share with specific parties, for specific purposes, or both (without consent)

  - “[A]re responsible for using reasonable methods to ensure that their authorized representatives comply with FERPA”

  - “[M]ust use a written agreement to designate an authorized representative (other than an employee)”

AI AND FERPA: SCENARIOS

- Can faculty submit student work to AI detectors – without their institution owning a license for the service?

  - The Supreme Court ruled that neither homework nor classwork is part of a student’s educational record; therefore, they are not protected by FERPA (Are Student Files Private? It Depends; Owasso Independent School Dist. No. I-011 v. Falvo, 534 U.S. 426, 2002). The grades on that work, however, are protected by FERPA as part of a student’s educational record

  - In light of the above, if all identifiable information about a student (name, grade, course, student identification number, etc.) has been removed from that work, then Personally Identifiable Information (PII) is being protected

- Can faculty require students to create AI accounts in order to complete homework? Can faculty require students to use AI in order to complete homework?

  - Setting up an AI account requires the sharing of information classified as PII. However, some of that information may be disclosed without risking a FERPA violation if the institution has declared it Directory Information

  - Mainstream AI platforms lack transparency with regard to data sources, data collection, data mitigation, and data use (The Foundation Model Transparency Index)

  - Using AI models removes any school/district oversight and/or control over potentially shared PII data

  - Pew Research has shown “[t]eens are sharing more information about themselves on social media sites than they did in the past.” Some privacy experts have raised concerns that the sharing of PII could bleed into AI model use

  - The study “Beyond Memorization: Violating Privacy Via Inference With Large Language Models” revealed AI models can infer personal information about users even if users do not provide it. Thus, it’s technically possible for AI models to deduce aspects of a student’s PII

- Can students under 18 years of age interact with AI models?

  - The terms of use listed below (from mainstream AI models) generally expect adult users; some allow users under 18 with parental/guardian approval

    - Claude2 (Anthropic) = at least 18 years old

    - Bard (Google) = 18 years and older

    - Bing (Microsoft) = at least 13 years old; if under 18, parental or guardian consent expected

    - OpenAI (ChatGPT; DALL·E) = at least 13 years old; if under 18, parental or guardian consent expected

    - Perplexity (Perplexity AI) = at least 13 years old; if under 18, parental or guardian consent expected

    - personal.ai (Human AI Labs Inc.) = 16 years of age

    - Pi (Inflection AI) = “our services are not intended for minors under the age of 18”

    - Poe (Quora) = at least 18 years old

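For instructors who want to check quickly which of the platforms listed above a student of a given age could use, the minimum ages can be expressed as a small lookup. This is only an illustrative sketch: the ages are copied from the list above, the function name `platforms_for_age` and the `has_guardian_consent` flag are hypothetical, and terms of use change, so the current terms of each service should always be verified.

```python
# Minimum ages copied from the list above (assumption: still current).
MINIMUM_AGE = {
    "Claude2 (Anthropic)": 18,
    "Bard (Google)": 18,
    "Bing (Microsoft)": 13,
    "OpenAI (ChatGPT; DALL·E)": 13,
    "Perplexity (Perplexity AI)": 13,
    "personal.ai (Human AI Labs Inc.)": 16,
    "Pi (Inflection AI)": 18,
    "Poe (Quora)": 18,
}

# Platforms whose listed terms expect parental/guardian consent under 18.
NEEDS_CONSENT_UNDER_18 = {
    "Bing (Microsoft)",
    "OpenAI (ChatGPT; DALL·E)",
    "Perplexity (Perplexity AI)",
}


def platforms_for_age(age, has_guardian_consent=False):
    """Return the listed platforms whose stated terms a user of `age` meets."""
    allowed = []
    for name, minimum in MINIMUM_AGE.items():
        if age < minimum:
            continue  # below the platform's stated minimum age
        if age < 18 and name in NEEDS_CONSENT_UNDER_18 and not has_guardian_consent:
            continue  # consent expected but not available
        allowed.append(name)
    return allowed


if __name__ == "__main__":
    print(platforms_for_age(16))
    print(platforms_for_age(16, has_guardian_consent=True))
```

Meeting a platform's age requirement does not settle the FERPA or institutional policy questions discussed in this section.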

DATA SHARING/DISCLOSURE VIA ONLINE EDUCATIONAL SERVICES

FERPA-RELATED

“Schools and districts will typically need to evaluate the use of online educational services on a case-by-case basis to determine if FERPA-protected information (i.e., PII from educational records) is implicated. If so, schools and districts must ensure that FERPA requirements are met.” (Page 3, Protecting Student Privacy While Using Online Educational Services: Requirements and Best Practices)

Institutions may work with third-party educational technology providers, keeping the following in mind:

- “Metadata that have been stripped of all direct and indirect identifiers are not considered protected information under FERPA because they are not PII.” (Page 3); this process is referred to as “de-identifying”

- When creating user accounts, information students share may fall under the “directory information” exemption – however, due to the ability to opt out, applying the “school official” exemption is preferred

- SUMMARY: “It is important to remember, however, that student information that has been properly de-identified or that is shared under the “directory information” exception, is not protected by FERPA, and thus is not subject to FERPA’s use and re-disclosure limitations.”
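As a rough illustration of what removing direct identifiers from a piece of student work might look like before it is shared with a third-party tool, the sketch below replaces a list of known identifiers with a placeholder. It is a minimal example under stated assumptions: the function name `redact_identifiers`, the sample text, and the identifier list are all hypothetical, and simple text replacement does not catch indirect identifiers, so it does not by itself establish that material has been “properly de-identified” under FERPA.

```python
import re


def redact_identifiers(text, identifiers, placeholder="[REDACTED]"):
    """Replace each known identifier string in `text` with `placeholder`.

    `identifiers` is a hypothetical list supplied by the instructor
    (e.g., student name, ID number, course code). Matching is literal and
    case-insensitive; anything not on the list is left untouched.
    """
    redacted = text
    # Handle longer identifiers first so a full name is replaced
    # before any shorter identifier it contains.
    for item in sorted(identifiers, key=len, reverse=True):
        if not item:
            continue  # skip empty strings
        redacted = re.sub(
            re.escape(item),
            lambda _match: placeholder,
            redacted,
            flags=re.IGNORECASE,
        )
    return redacted


if __name__ == "__main__":
    # Entirely fictional sample data.
    sample = "Jordan Rivera (ID K0012345), ENG-105: My essay argues..."
    print(redact_identifiers(sample, ["Jordan Rivera", "K0012345", "ENG-105"]))
```

A manual read-through is still needed afterward, since student writing often contains details (family members, workplaces, events) that could allow re-identification.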

“The FERPA school official exception is more likely to apply to schools’ and districts’ use of online educational services. Under the school official exception, schools and districts may disclose PII from students’ education records to a provider as long as the provider:

- Performs an institutional service or function for which the school or district would otherwise use its own employees;

- Has been determined to meet the criteria set forth in the school’s or district’s annual notification of FERPA rights for being a school official with a legitimate educational interest in the education records;

- Is under the direct control of the school or district with regard to the use and maintenance of education records; and

- Uses education records only for authorized purposes and may not re-disclose PII from education records to other parties (unless the provider has specific authorization from the school or district to do so and it is otherwise permitted by FERPA).” (Page 4)

Limits to data sharing:

- Tech service providers can only use student data to perform services explicitly outlined in their contracts

- Tech service providers cannot share (or sell) any FERPA-related information to another outlet or re-use it for other purposes “except as directed by the school and as permitted by FERPA” (Page 5)

Issues of data control (typically outlined via a contract or legal agreement, Page 9):

- “Make clear whether the data collected belongs to the school/district or the provider”

- “Be specific about the information the provider will collect”

- “Define the specific purposes for which the provider may use student information, and bind the provider to only those approved uses”

- “If student information is being shared under the school official exception to consent in FERPA, then it would also be a best practice to specify in the agreement how the school or district will be exercising “direct control” over the third party provider’s use and maintenance of the data”

- “Specify whether the school, district and/or parents (or eligible students) will be permitted to access the data (and if so, to which data) and explain the process for obtaining access”

PROTECTION OF PUPIL RIGHTS AMENDMENT (PPRA)-RELATED

PPRA prescribes:

- A school district “directly notify parents of students who are scheduled to participate in activities involving the collection, disclosure, or use of personal information collected from students for marketing purposes, or to sell or otherwise provide that information to others for marketing purposes, and to give parents the opportunity to opt-out of these activities.” (Page 6)

- “PPRA has an important exception, however, as neither parental notice and the opportunity to opt-out nor the development and adoption of policies are required for school districts to use students’ personal information that they collect from students for the exclusive purpose of developing, evaluating, or providing educational products or services for students or schools.” (Page 6)

- Parents have rights to review instructional materials

PPRA, which applies to all students until they turn 18, “is invoked when personal information is collected from a student.” (Page 6)

- “The use of online educational services may give rise to situations where the school or district provides FERPA-protected data to open accounts for students, and subsequent information gathered through the student’s interaction with the online educational service may implicate PPRA.” (Page 6)

- Whether FERPA and/or PPRA apply “depends on the content of the information, how it is collected or disclosed, and the purposes for which it is used.” (Page 6)

DEFINITIONS

FERPA defines Educational Records as: records directly related to a student that are maintained by an educational agency or institution or by parties acting for the educational agencies or institutions. The information can be in the form of (but not limited to) “handwriting, print, computer media, videotape, audiotape, film, microfilm, microfiche, and email.” (Studentprivacy.ed.gov)

FERPA defines Personally Identifiable Information (PII) as: identifiable information that is maintained in education records and includes direct identifiers, such as a student’s name or identification number, indirect identifiers, such as a student’s date of birth, or other information which can be used to distinguish or trace an individual’s identity either directly or indirectly through linkages with other information. (Studentprivacy.ed.gov)

- Name and address

- Name and address of parents or other family members

- Personal identifiers: Social Security number, student ID #, biometric records (facial characteristics, retina or iris patterns, voiceprints, DNA sequences, fingerprints, handwriting)

- Other indirect identifiers: date of birth, place of birth, mother’s maiden name

- Other information that could allow re-identification of a student with reasonable certainty

- Information requested by a person who the school reasonably believes knows the identity of the student to whom the record relates

FERPA defines Directory Information as: information contained in an education record of a student that would not generally be considered harmful or an invasion of privacy if disclosed. (Studentprivacy.ed.gov)

- Student’s name

- Student’s address

- Date of birth and place of birth

- Photographs

- Participation in officially-recognized activities or sports

- Field of study

- Enrollment status

- Degrees and awards

- An athlete’s height and/or weight

- Dates of attendance

- Most-recent school(s) attended

- Grade level

DATA SHARING/DISCLOSURE

FERPA’s Disclosure Provision allows the sharing of PII, without consent, under the following conditions:

- To school officials

- To other schools in which a student wishes to enroll

- To educational authorities conducting an audit

- For financial aid purposes

- For disciplinary proceedings

- For accreditation/audits and evaluations

- For parents of dependent students

- As directory information

- To comply with legal proceedings (in some cases, only after making a “reasonable effort” to contact the student)

- When immediate health or safety concerns are an issue

- For studies for or on behalf of an institution (developing, evaluating, or administering predictive tests; administering student financial aid, improving instruction)

FERPA’s Disclosure Provision allows the sharing of PII, if an institution gives public notice regarding:

- The types of PII designated as “Directory Information”

- The right to opt out of their information being shared

- The time period for opting out

Written agreements regarding data sharing:

- Must be in place when not working with official school/institution employees

- Must designate who an authorized representative of the school/institution is

- Must guarantee the entity disclosing PII uses “reasonable methods” to ensure, to the greatest extent practicable, that the authorized representative is FERPA compliant

AI PLATFORMS PRIVACY STATEMENTS

ADDITIONAL RESOURCES

- A Dangerous Inheritance: A Child’s Digital Identity

- Artificial Intelligence and the Future of Teaching and Learning

- Big Tech Makes Big Data Out of Your Child

- Children’s Digital Privacy

- Children’s Online Privacy Protection Rule

- Data Sharing Toolkit for Communities

- Is Your Use of Social Media FERPA Compliant

- Off Task!

- Office of Educational Technology

- Platform for Excellence in Teaching and Learning (Miami University)

- State Student Privacy Report Card

- Student Privacy Glossary (Studentprivacy.ed.gov)

- Student Privacy in the Digital Age

- What is an Education Record?

FERPA EXCERPT: § 99.31 Under what conditions is prior consent not required to disclose information?

(a) An educational agency or institution may disclose personally identifiable information from an education record of a student without the consent required by § 99.30 if the disclosure meets one or more of the following conditions:

(1) (i) (A) The disclosure is to other school officials, including teachers, within the agency or institution whom the agency or institution has determined to have legitimate educational interests.

(B) A contractor, consultant, volunteer, or other party to whom an agency or institution has outsourced institutional services or functions may be considered a school official under this paragraph provided that the outside party—

(1) Performs an institutional service or function for which the agency or institution would otherwise use employees;

(2) Is under the direct control of the agency or institution with respect to the use and maintenance of education records; and

(3) Is subject to the requirements of § 99.33(a) governing the use and redisclosure of personally identifiable information from education records.

(ii) An educational agency or institution must use reasonable methods to ensure that school officials obtain access to only those education records in which they have legitimate educational interests. An educational agency or institution that does not use physical or technological access controls must ensure that its administrative policy for controlling access to education records is effective and that it remains in compliance with the legitimate educational interest requirement in paragraph (a)(1)(i)(A) of this section.

Concern: AI Use and Academic Integrity

Students may ask why using Artificial Intelligence (AI) to complete their work violates academic integrity.

When Policy Is “No”

Faculty can explain that AI use violates Kirkwood’s academic integrity policy when the tasks AI performs on behalf of a student go against the stated/written policies of an instructor. At Kirkwood, individual faculty members determine the limits of AI use to complete assigned work. For instance, some instructors may allow students to use AI to generate outlines or thesis statements; others may not allow AI assistance in any form. When in doubt, a best practice is for students to clarify with their professor what they can and cannot use AI for.

If a course policy says Artificial Intelligence cannot be used to create student work, and a student uses AI to create work they then pass off as their own, it violates academic integrity because:

- Stated expectations of academic performance and behavior have been directly violated.

- It constitutes plagiarism. Plagiarism is defined as using the work of another entity, in this case a Large Language Model (LLM), and passing it off as your own. Thus, using Artificial Intelligence to produce academic work that you claim is your own is unethical.

- It may be the theft of intellectual property. The current training procedures associated with mainstream LLMs are secretive. Most experts agree machine learning (ML) developers mine Internet content to refine AI algorithms. Some of that content is copyrighted or otherwise protected intellectual property (court cases regarding this unfair, and potentially illegal, use of material are underway). Thus, AI-generated content may be directly influenced by the work of others, or may even replicate it (i.e., steal it) without permission.

When Policy Is “Yes”

If a course policy says Artificial Intelligence can be used to create student work, and a student uses AI to create work they then pass off as entirely their own, it still violates academic integrity because:

- Authorship and Attribution: The student has failed to abide by expectations of transparency by not crediting Artificial Intelligence for their work

Concern: Protecting Privacy = Chatbot Histories

A mainstream concern about AI developers is their unquenchable thirst for user data! While completely maintaining digital privacy when using Artificial Intelligence tools is impossible, there are steps a user can take to limit the amount of information shared with AI companies:

Concern: The Ethics of Artificial Intelligence

Almost all comprehensive discussions of AI (at some point) include reference to “the ethical uses of AI.” Critiques may focus on secretive AI development, dataset bias, and hallucinations, but ethical concerns are much broader and more complex than what is typically highlighted. The categories below highlight numerous, and significant, drawbacks associated with the technology.

- FUNCTIONAL: Black-box (“secretive”) development, data set concerns (bias, misinformation remediation, source material, source collection, etc.), hallucinations, terms of use conditions, transparency

- PERSONAL: Do I feel comfortable: having to create user accounts, sharing my data, providing free labor (training the AI model through my interaction), handing over agency; am I concerned about: skill loss, AI dependency, the appropriateness of AI tools for certain users (under 18 years old), the overwhelming speed of change

- INSTITUTIONAL (EDUCATIONAL): Age-appropriate AI, district/institutional favoritism, issues of representation, use policies, enterprise costs, AI-related professional development availability, FERPA challenges, assessment and curriculum changes/updates

- FINANCIAL: Job displacement, worker replacement, pressures to upskill, cost concerns, concentrations of influence, monopolies, mergers, talent siphoning

- SOCIETAL: Limited digital literacy, growing digital divide, overall equitable use, data set/AI output biases, deep fakes, misinformation/fake news, concentrated AI knowledge, privacy loss, reliance on digital communication, copyright/intellectual property theft

- GOVERNMENTAL: Government oversight or a lack thereof, reactive regulation, official policy development, developer/corporate accountability, democratic stability, free press/speech, state-sponsored cyberattacks, digital infrastructure creation/maintenance

- GLOBAL: Consumption levels (water, electricity, natural resources – lithium), labor exploitation, developed world favoritism/biases, trade/component wars, state-sponsored cyber terrorism

The Ethics of Artificial Intelligence: Further Reading

FUNCTIONAL

- Microsoft AI engineer warns FTC about Copilot Designer Safety Concerns

- OpenAI signs open letter to build AI responsibly just days after Elon Musk sued the company for putting profit ahead of people

- AI Has a Problem with Gender and Racial Bias

INSTITUTIONAL (EDUCATIONAL)

- FERPA & AI: What is protected?

- Guidance for the Use of Generative AI

- How teachers make ethical decisions when using AI in the classroom

FINANCIAL

- How Klarna uses AI

- Klarna CEO: AI Efficiency Raises Societal Questions Beyond Job Replacement

- ChatGPT may be coming for our jobs. Here are the 10 roles AI is most likely to replace

- AI could replace equivalent of 300 million jobs – report

SOCIETAL

- Researchers tested leading AI models for copyright infringement using popular books, and GPT-4 performed worst

- What Happens When Your Art Is Used to Train AI

- Political deepfakes are spreading like wildfire thanks to GenAI

- “AI will cure cancer” misunderstands both AI and medicine

- Microsoft begins blocking some terms that caused its AI tool to create violent, sexual images

GOVERNMENTAL

- India reverses AI stance, requires government approval for model launches

- House oversight leaders introduce government AI transparency bill

- Biden plans to step up government oversight of AI with new ‘pressure tests’

GLOBAL

- Generative AI’s environmental costs are soaring – and mostly secret

- As the AI industry booms, what toll will it take on the environment?

- AI’s Unsustainable Water Use: How Tech Giants Contribute to Global Water Shortages

- Clean tech: AI straining US energy supply

- AI likely to increase energy use and accelerate climate misinformation – report

- Amid explosive demand, America is running out of power

- How much energy does AI consume?

- The AI Boom Could Use a Shocking Amount of Energy

- The Hidden Costs of AI

- OpenAI Used Kenyan Workers on Less Than $2 Per Hour to Make ChatGPT Less Toxic

Control: AI Detection Software

Please refer to the discussion “AI Detectors: What to Know” in the chapter, AI Course Policies and Syllabi Statements.

Control: Classroom AI Management Techniques

It may be impossible to create AI-resistant materials (MagicSchool offers a tool for this) as the capabilities of Artificial Intelligence continue to evolve. Even so, if AI is not allowed, applicable, or useful for completing a particular assessment or project, these approaches may help reduce the appeal of using AI as well as its impact.

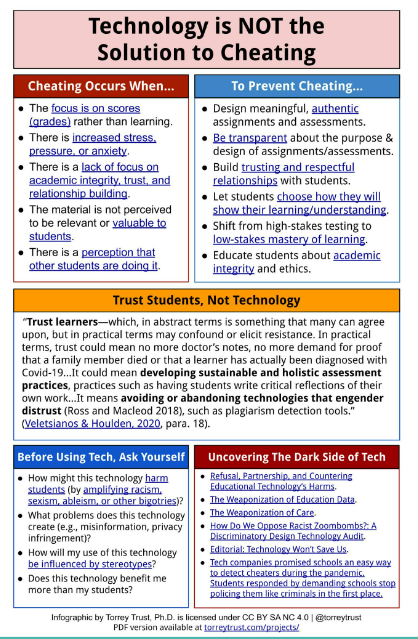

For another summation, read “Technology is NOT the Solution to Cheating” (screencaptured below).

Reconsiderations in the Age of AI

Is it possible to avoid requiring students to complete all their work in class, right in front of the instructor? Yes! Here are some approaches that may help reduce the influence of AI on student work . . .

Really Talk About Expectations

Devote space in your syllabus, time in your class, and room in your directions to sharing with students your expectations regarding AI use and why you consider it a positive/negative, allowed/not allowed component in your teaching. Surveys reveal there is great confusion among students regarding how they can use AI, as well as great fear of being accused of using AI when they haven’t. Discussing all the whys and what-ifs promotes transparency, dialog, and engagement and provides students a platform for sharing and reflecting.

Learning Outcomes

Review your student learning outcomes/objectives. Can Artificial Intelligence assist students in achieving any of them or is AI not a good fit? This may help you determine if limits against AI can be graduated depending upon what you are asking students to do.

Timing

A common justification for cheating or cutting corners is “I didn’t have the time.” A great deterrent to using Artificial Intelligence to rapidly complete a project is to allow more time for completing work. Review your semester schedule and see if more time can be added for more important/point-heavy assignments or if you can introduce flexibility in your late policy. Additional time may help reduce student stress and thus the urge to resort to quick-fixes.

Scaffolding

In conjunction with timing, is it possible to break down complicated and elaborate assignments into smaller components? Can aspects of summative assessments be submitted over the course of days or weeks?

Presentations

Rather than have students present their arguments, ideas, and conclusions in text, have them share that information via presentations – which they can record. Emphasis can be placed on not reading their commentary . . .

Do It Yourself

Enter your assignment directions into an AI chatbot and see how well it does! Can it complete the project successfully enough to pass? If so, you may want to reconsider the nature of your assignment.

Authentic Assessment

Direct Reflection

When concerned about unauthorized AI use, or just as a means of promoting student learning, engagement, and communication, ask students specific questions about an assessment after they complete it.

Indirect Reflections

Whether students can use Artificial Intelligence or not, include personal reflection questions within assignments addressing how they approached the project. What sources did they use and why? What aspects were the most challenging and how did they overcome that barrier? Why did they answer the way they did? If they can rely on Artificial Intelligence, what tools did they pick and why? What were they hoping to gain from the technology?